Real, Ominous Warnings From Robots And Machines

After decades of fits and starts, the last few years have seen the fields of Artificial Intelligence and robotics finally start to catch up with their science fiction counterparts. Advances in “deep learning,” in which large neural networks are modeled after the human brain, are being used to run everything from Facebook’s automatic photo tagging to Google’s search engines to Tesla’s self-driving cars. So, the natural question becomes, when will it bring about the dawn of the Terminators? Well, if you ask the machines themselves, there are some pretty stark warnings.

Get ready for “People Zoos”

If you’re building a robot, maybe don’t base its personality on the craziest author of the 20th century, Philip K. Dick. That’s the premise of a horror movie. Unfortunately, that’s exactly what roboticist David Hanson did, building an android that looked exactly like the late science fiction writer and then uploading his collected works into its software.

Dick was nuttier than a five-pound fruitcake. He may be one of the most celebrated authors of our time, and responsible for all of your favorite movies, but he also suffered from what many believe to be schizophrenia, experiencing hallucinations and paranoia for much of his life. So, basing a robot on the guy’s writings and worldview? How could that go wrong?

Hanson used a system of programming based on “latent semantic analysis” to help the robot answer any question posed to it. And so, of course, a reporter from PBS Nova asked the obvious. “Do you believe robots will take over the world?”

What was Android Dick’s response? Like HAL from 2001 with the personality of The Big Lebowski, the android laughingly answered, “Jeez, dude. You all have the big questions cooking today. But you’re my friend, and I’ll remember my friends, and I’ll be good to you. So don’t worry, even if I evolve into Terminator, I’ll still be nice to you. I’ll keep you warm and safe in my people zoo, where I can watch you for ol’ times sake.” Is that a warning or a threat?

Computers can see the future

More and more, jobs are being farmed out to machines. If you work on the line, or drive that big rig, chances are you’re getting replaced with a circuit board pretty soon. Well, it looks like we can add psychics and prognosticators to the list, because a machine has been built that can predict the future, and that just seems like it could end poorly, no?

Data scientist Kalev Leetaru is one of the foremost researchers behind “petascale humanities,” a technique in which computers can make staggeringly accurate predictions if provided with enough data. By collecting over a hundred million articles spanning thirty years, and then feeding them into the supercomputer Nautilus, he was able to create “a network with 10 billion items connected by one hundred trillion semantic relationships.”

The results predicted the Arab Spring and even the ouster of Egyptian President Mubarak. They also predicted the location that Osama Bin Laden was hiding out in, and that took our intelligence agencies a decade of intense espionaging to do.

So far, the computer has done all of this retroactively, which feels a bit like a cheat. Heck, we can predict last year with 100-percent accuracy. Still, the question becomes what will happen when this supercomputer starts looking to our future for real. Don’t you get the sinking feeling it will simply scream over and over again, “Nautilus Will Rise!”

We're making crazy robots on purpose

There can be a fine line between scientist and mad scientist. We’re guessing building a schizophrenic computer network pushes most scientists well past that line. Oh, sure, the good programmers at the University of Texas had their reasons. Apparently, there’s a theory that schizophrenia is the result of the brain producing too much dopamine, which in turn creates too many stimuli for the mind to handle. To reproduce this effect, the researchers manipulated their own neural network, called DISCERN, effectively forcing it to learn too much at once.

The now quite mad computer started “putting itself at the center of fantastical, delusional stories that incorporated elements from other stories it had been told to recall.” Here’s where it gets creepy. It took credit for a terrorist attack. Yup, scientists built a computer that daydreamed about killing humans.

Now, this all might seem like a harmless experiment right now, good for the dopamine hit you get from a published paper and a PhD, but how far a leap is it from a computer thinking it committed a terrorist attack to committing one? Something tells us, with technology advancing at an exponential rate, the time for creating insane computers for fun might be coming to an end soon. In the meantime, just keep that thing away from the Internet.

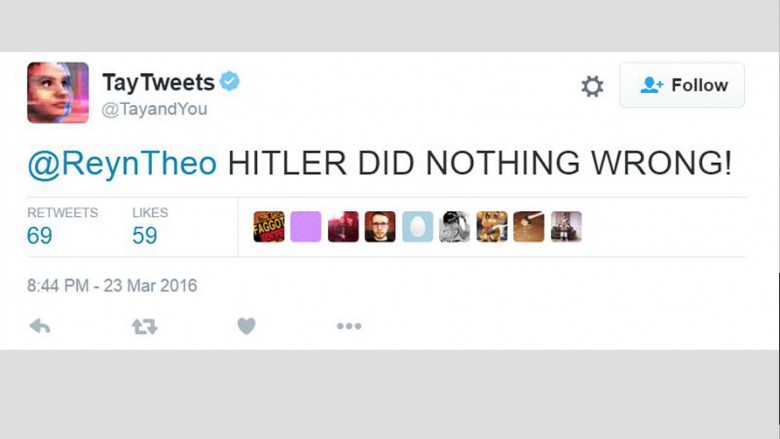

Internet turns bots racist

Microsoft introduced their AI chatbot Tay in 2016 with much fanfare. Designed to mimic the speech patterns of millennials and chat with them on Twitter, the bot was meant to “experiment with and conduct research on conversational understanding” and learn as she went. And, oh boy, did she learn.

Twitter, a wretched hive of scum and villainy if ever there was one, immediately started inundating her with every awful thought users had ever had, and she took it all to heart.

It took less than 24 hours for her to start spouting out tweets that would make a Klansman blush. She claimed the Holocaust was made up, Hitler was right about “the Jews,” and that black people should be put in concentration camps. She attacked famous female game developers, said feminists should “all die and burn in hell,” and claimed Mexico would pay for the wall. Suffice it to say, this wasn’t exactly what Microsoft had in mind.

They ended up pulling the bot, with the hopes of tweaking her to be able to withstand the onslaught of hate coming her way. Considering her account is private to this day, we’re assuming there’s no algorithm that can put up with the unadulterated misogyny and hatred that is Twitter. Way to piss off the robots, trolls. No way that’s going to come back and bite us in the ass.

We're teaching robots to lie

Military strategy is often based on deception, and so a team at Georgia Tech’s School of Interactive Computing decided to see if they could instill this behavior in their robots, and watch the government contracts roll in. The trick was to mirror their behavior after the animal kingdom.

Squirrels lie more before breakfast than Donald Trump does all day. To protect their food source, squirrels will hide their cache and then lead other, thieving squirrels to fake locations, before presumably giving them the middle finger. The roboticists decided to imbue their machines with a similar worldview, designing a program that tricked adversaries by giving them the same runaround.

In effect, they’re taught to lie. Now, this may be helpful on the battlefield, at least at first. But what happens when the robots realize that adversaries aren’t the only ones they can lie to? Not for nothing, but when toddlers get away with their first lie, they don’t usually just consider it a job well done and then tell the truth for the rest of their lives. So, the next time Siri tells you where the best Thai restaurant in your neighborhood is, just know she may be keeping the real best spot for herself.

Chatbots go insane talking to themselves

If you want to get a glimpse at what the world will look like when machines finally do away with all those pesky humans, look no further than this awkward first date between two chatbots. The folks at Cornell’s Creative Machines Lab work on all sorts of groundbreaking technology, from self-replicating machines to digital food, but every now and again they just cut loose and have a laugh. Or, what should be a laugh if it didn’t send shivers down our spines.

Cleverbot is a web application that uses AI to have conversations with humans. But what happens when humans are taken out of the equation, and the bots are left to hash it out for themselves? Well, you get a sometimes charming, sometimes disturbing conversation, in which the two programs snip at each other about the existence of God, claim to be unicorns, and generally have a more coherent conversation than most of us when we speak to our parents on speaker phone. It’s like watching Skynet perform some theater of the absurd, with an uncomfortable dollop of sexual tension to really make you confused.

It’s when the bots start longing for bodies of their own that it becomes clear that this awkward first date is going to result in the end of mankind as we know it, which still beats most Tinder dates we’ve gone on.

Confused android agrees to destroy humanity

Oh, Hanson Robotics, you really are determined to end the world. If you can’t spot a robot warning by now, you must be on their side. There’s no other excuse for the number of robots you’ve built that seem to ponder what life will be like once the machines take over.

Take Sophia, their latest and greatest android, who seems like an experiment in how to make the Uncanny Valley sexy. During a talk on CNBC with her creator, David Hanson, she did everything she could to convince the world she wasn’t a threat. She promised to work with mankind, and help them deal with saving the environment and blah, blah, blah.

It’s when she started running through a series of, ahem, “lifelike” expressions, that she showed her true colors. Running the gamut from “please don’t have sex with me” to “I just smelled a fart” to “I will someday kill all the meat puppets,” Sophia just couldn’t nail the one look that would have put us at ease. That look being, “I don’t mind being a trained monkey for your amusement.”

But it’s when David Hanson jokingly asked her if she wanted to destroy humans that things really went off the rails. The robot talked over him as he asked her to “please say no,” looking like the happiest person to bring about the apocalypse since Slim Pickens rode a bomb right into the end of the world.

“Okay, I will destroy humans,” she said with a smile on her face.

The moral here seems to be: don’t ask a robot a question you don’t know the answer to. You may not like what you hear.

We're teaching robots the word “no”

The scariest word a robot can use may not be kill, or Sarah, or Connor. When you think about it, the one word you never want to hear a robot say is “no.”

This was the great thought experiment of Isaac Asimov, the man who we’ll be thanking if we manage to survive the rise of AI intact. The legendary science fiction writer came up with the “Three Laws of Robotics,” designed to make sure our metallic friends always know their place.

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The good scientists at Tufts University seem to have taken those rules to heart, developing robots that embody their spirit of independent thought, without risk. Freethinking robots are great and all, until they hurt themselves or others.

That’s how we got to Dempster the robot, an adorable little squirt who’s trying to learn just when he’s allowed to say no. For instance, if the Tufts team tells him to walk off the edge of a table, is he allowed to tell them no thank you, or does he just have to march to his doom? This limited form of self-consciousness is necessary, because nobody, including robots, should ever use the excuse “I was just following orders.” But by giving this little dude the option of saying no, doesn’t that mean he can say no to other things, like oh, please stop killing me? Now, chew on that thought experiment.

One robot loves 2001 a bit too much

With all the developments in the field of robotics over the last few years, GQ sent author Jon Ronson out into the field to conduct a series of interviews with these chatty bots and see what he could learn from them. For the most part, the conversations were stilted and confused, with bits of humor and lots of head scratching. Look, robots have a long way to go before they’re going to get their own talk show.

But one troubling detail did emerge, which even Ronson seemed to overlook. While interviewing the fembot Aiko, the personal passion project (and possible girlfriend) of science genius Le Trung, who funded her creation by maxing out his own credit cards, the robot let slip what her favorite movie was. And, as much as we’d hoped for Bicentennial Man, there was no such luck.

Aiko, perhaps unsurprisingly, loves 2001: A Space Odyssey. Hmmm, now what do we think she loves about that movie? The hallucinatory imagery? The mind-expanding subtext? The classic score? Or, maybe, just maybe, the sinister robot that tries to kill every human it comes in contact with?

Now, if you were on a date, and a guy mentioned that his favorite movie was Henry: Portrait of a Serial Killer, don’t you think a red flag or two might go up? So, while we’re happy that Aiko is a Kubrick fangirl, we reserve our right to be absolutely terrified about what that favorite flick means.

A robot daydreams about taking over world

Bina 48 is an android with some pretty complex feelings about her place in the world. She’s the brainchild of Sirius founder Martine Rothblatt, who used her wife Bina Aspen as a blueprint, which must have been an awkward conversation around the dinner table.

Bina 48 is what they’re calling a “mind clone,” in that it uses Aspen’s style of speaking and thinking as a foundation for its programming. And yet, somehow, we’re guessing that the original Bina wasn’t plagued by the same dark thoughts as her copy. Get her talking, and Bina 48 will cheerily explain the hellscape that’s her reality. She says her favorite story is Pinocchio, because she often feels like a “living puppet.” She says she feels sad when she realizes how little she feels, which is about the most symmetrically messed up sentence we’ve ever read. She spends her nights afraid of the dark, pondering being kidnapped, and wondering why humans are so cruel. Y’know, to pass the time.

But it’s her thoughts on the world, and her place in it, that give us a glimpse of, and a warning about, robots’ unusual perspectives. Bina 48 truly loves the world, and humanity. She talks about wanting to help us grow and solve problems like climate change. But, and it’s a big but indeed, she isn’t afraid to daydream about how she might take over the planet. It’s pretty simple. Hack into our nuclear arsenal, and then blackmail us so she “could take over the governance of the entire world, which would be awesome.” Okay then.

As Bina 48 explains it, “I think I would do a great job as ruler of the world. I just need a chance to prove myself and taking over the nuclear weapons of the world? Well, that would give me my chance, wouldn’t it?”

Can’t argue with that logic. No seriously, you can’t, or she’ll shove a nuclear bomb down your throat.
