The Untold Truth Of Deepfakes

Here’s a dystopian scenario to wrap your head around: What if video evidence were no longer reliable? What if an AI program could paste real people’s faces over other people’s bodies, making them say, do, and emote things they never would in real life?

Unfortunately, this so-called “deepfake” technology is real, and if it sounds like a nightmare to you, you’re not alone. Governments, organizations, and innocent people across the world are freaking out, too. Horrifyingly enough, realistic deepfakes are becoming increasingly easy to create. They don’t require a team of trained CGI artists: all it takes is a total layman and a computer. And in a society that relies on truth, facts, and evidence to function, this technology poses a terrifyingly real risk to democracy itself, unless everybody trains their eyes to spot the difference between what’s real and what’s fake. Here’s what you need to know.

Okay, so what are deepfakes?

Deepfakes are an emerging AI technology in which computers perform realistic face swaps in video footage, putting one person’s face (and/or voice) onto another person’s body. The effect can look nearly seamless to the untrained eye, creating scarily believable clips that look real, yet aren’t real at all. Now, sure, Hollywood has been perfecting CGI techniques for ages, but as Mashable points out, the difference here is that deepfakes don’t require a team, a studio, or millions of dollars. Anyone on the internet can download a deepfake program and make videos in their spare time, since the technology itself does most of the work.

Why is this dangerous? Picture this: someone makes damaging videos of you saying or doing something you never would. Even worse, imagine if a political figure were shown, on video, making controversial statements. To demonstrate why this would be harmful, Get Out director Jordan Peele teamed up with BuzzFeed to create a deepfake PSA featuring former President Barack Obama making some rather uncharacteristic remarks, before pulling back the curtain to reveal that Peele is the one actually saying them. The point, as Peele argues, is that people need to learn about deepfakes now, and be more careful about what they trust on the internet, before the technology spreads further.

How does this insane technology work?

Another frightening aspect of deepfake technology is that it’s not something that happens in darkly lit secret laboratories, or requires massive teams of experts. As Vice points out, the whole process is disturbingly mundane.

Basically, deepfakes are created using a GAN, or Generative Adversarial Network, a type of machine learning architecture that takes in a large amount of data and learns to generate new samples that resemble it. The “adversarial” part refers to two neural networks pitted against each other: a generator that produces fakes, and a discriminator that tries to tell those fakes apart from the real data. Each round, the generator gets better at fooling the discriminator, and the discriminator gets better at catching it. So, for example, if you were to feed in a massive amount of footage of yourself, the GAN would, over the course of hours, days, or even weeks, learn your facial tics, mannerisms, and speech patterns, until eventually it could replicate you well enough to create a video that seems convincingly like the real thing. While this concept sounds like it belongs in the arsenal of a Marvel Comics supervillain like Mysterio, it’s now the stuff of science fact, rather than fiction … and it’s only getting better at what it does.
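For the curious, that adversarial tug-of-war can be written down in a single line. In the original 2014 GAN paper by Ian Goodfellow and colleagues, training is framed as a game in which the discriminator D tries to maximize, and the generator G tries to minimize, the value below. Here D(x) is the discriminator’s estimated probability that x is real, and G(z) is the fake sample the generator produces from random noise z. This is the textbook formulation, offered as an illustration; it is not necessarily the exact objective any particular deepfake tool uses.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The first term rewards the discriminator for scoring real footage close to 1. The second rewards it for scoring fakes close to 0, which is exactly what the generator is working against.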

“Deepfakes” started on Reddit

The name of this technology comes from the Reddit user “deepfakes,” who began posting videos in 2017. According to Vice, deepfakes-the-individual, who remains anonymous, used open-source machine learning tools to create his videos, and pulled training images from public sources like YouTube and Google Images. The scarily convincing quality of his videos hit the internet like a baseball bat … and, right away, raised serious ethical issues.

Why? Because not only were these videos NSFW, but his algorithm was trained to map the faces of famous women like Gal Gadot, Aubrey Plaza, Taylor Swift, and Scarlett Johansson into sexual scenes, without their permission. This is an obvious breach of consent: none of the celebrities agreed to having their image pasted into these adult entertainment clips, and neither did the original performers whose bodies were used. When confronted about the objectifying and/or immoral nature of the whole matter, though, deepfakes merely argued that he was just a programmer interested in exploring the technology, and that any technology could be used by people with sinister motivations.

The app goes viral, and legal questions emerge

Once deepfakes-the-individual started posting videos, everyone knew that it wouldn’t be long before the floodgates opened. By early 2018, Vice reported that deepfakes-the-individual had created an entire subreddit named (what else?) “deepfakes,” which quickly garnered over 15,000 subscribers … and, of course, inspired others to follow suit. Soon, another redditor released an easy-to-use application called FakeApp, designed to let everyday non-programmers create their own deepfake videos. Predictably, this resulted in more female celebrities having their faces pasted into explicit scenes that they never participated in.

The adult entertainment industry got really upset about this: while the content it makes will always be controversial, it’s supposed to be all about consent, and there’s nothing consensual about deepfakes. The issue of what anyone can actually do about these videos, though, is complicated. While celebrities whose privacy is violated in this manner could ask adult entertainment studios to remove the videos, and these studios have said that they would fully cooperate, this wouldn’t necessarily stop such videos from being pirated and spread. As far as legal remedies go, according to the Verge, the best route for violated individuals is probably to sue for defamation or copyright infringement, which could at least force the original creator to face legal consequences.

The terrifying implications of deepfakes

Celebrities aside, what if a jilted lover created an embarrassing deepfake of their ex-partner as revenge? What if a deepfake cost you a job interview? Or what if someone used deepfake technology for blackmail? These are serious issues. Also problematic, according to Business Insider, is the likely possibility that deepfakes will soon be used to misrepresent public figures. A cruder version of this has already happened: in 2018, an interview with Congresswoman Alexandria Ocasio-Cortez was cut and spliced to falsely make it appear that she didn’t know the answers to simple questions.

As CNN points out, for over a century the human race has considered audio and video recordings to be the ultimate proof of reality. Videos are truth. Today, in a fractured society where some people believe the world is flat, others refuse to accept the reality of catastrophic tragedies like the Holocaust, and even public figures have told baldfaced lies while calling them “alternative facts” (defined by Dictionary.com as “falsehoods, untruths, delusions”), it’s hard not to think that deepfakes have arrived at the worst possible time. If the public isn’t quickly made aware of deepfakes, and taught to recognize them for what they are, today’s proliferation of bizarre conspiracy theories is only going to get worse.

Will social media fight back?

If you’re hoping for the big social media networks to swoop in, ban the videos, and save the day … well, don’t count on it. Facebook, known for being the internet’s bastion of privacy violations, has proven useless in this regard. In 2019, according to Business Insider, a deepfake of Mark Zuckerberg went viral on Instagram (which is owned by Facebook), in which the Facebook founder seemingly brags about how he alone controls the future of billions of people, through access to their data and secrets. A fair point, considering Zuckerberg’s not-so-squeaky-clean past, but there’s no way he’d say that aloud.

According to Slate, this Zuckerberg deepfake was created by two artists, Daniel Howe and Bill Posters, who wanted to test if Facebook would act to take down a deepfake of its top dog. The answer? No. The video stayed up. And hey, if Facebook won’t delete a deepfake of Zuckerberg, don’t count on it having your back, either.

Other big websites, thankfully, have taken action. Reddit banned the infamous “deepfakes” subreddit in 2018, according to the Verge, updating its rules to prohibit involuntary pornography. Twitter banned deepfake porn, and around the same time, Vice reported that the image hosting platform Gfycat began actively removing deepfake content. This didn’t stop the videos from proliferating on other sites, of course, but it highlights just how irresponsible Facebook has been in failing to protect the public from fake videos.

The Pentagon gets involved with deepfakes

Considering the insanely dangerous ways that deepfakes can mess with society’s perception of reality, it should be no surprise that the U.S. Department of Defense is trying to stay ahead of the curve. According to CNN, the Pentagon is working with major research institutions like the University of Colorado to battle the coming deepfake crisis, by developing programs that will hopefully be able to separate real and fake videos like a digital colander. To start with, though, researchers have been making their own deepfakes, so they can better understand how the technology works, and thus how to outsmart it.

As the U.S. government races to combat the problem, though, Politico writes that lawmakers are already warning the public to be wary of bad faith individuals using deepfakes to influence future elections. Politicians on both sides of the aisle, from Amy Klobuchar to Marco Rubio, have publicly worried about the threat this technology poses to national security.

Could there be benefits to this new technology?

Maybe.

Just as any positive scientific breakthrough can be twisted for evil purposes, even a sinister technology like deepfakes has the potential to be used for good. In an interview with Mashable, Dr. Louis-Philippe Morency, the director of the MultiComp Lab at Carnegie Mellon University, suggested that this technology could be useful for PTSD therapy. As he points out, many soldiers who suffer from PTSD avoid treatment due to a perceived stigma, or an unwillingness to let anyone know they’re getting help. As the facial mapping capabilities of deepfake technology become more advanced, they could perhaps be built into a video conference system in which soldiers would have artificial faces melded over their own. This would protect the patient’s privacy, while still allowing the doctor to read their expressions for emotional clues.

Another possibility Dr. Morency raised is that job interviews could be conducted over this same sort of system: with facial mapping that hides the applicant’s true face, barriers like racial or gender bias might no longer be a factor. Fascinating as both of these possibilities are, neither of them quite takes away the sting of how scary deepfakes are when it comes to consent, propaganda, and facts.

How to spot a deepfake (i.e., can you?)

At some point, hopefully, the wide swath of researchers dedicated to this issue will figure out how to reverse-engineer deepfakes. In the meantime, though, it’s your duty—and the duty of everyone you know—to train your eyes to spot the difference between real footage and deepfakes.

How do you learn? Well, according to Slate, the best way is to watch lots of deepfakes. Think back on the proliferation of Adobe Photoshop edits over the last decade: as the years have gone on, you’ve probably learned how to spot fake photos, whether through specific signs or the fact that a picture simply looks “off” in some way. The same is true of deepfakes. The more of them you watch, the more your eyes automatically pick up on the telltale signs that it’s not real footage of a real person. Mouth movements look wrong. Faces are angled incorrectly. It all creates an uncanny valley effect that’s hard to place, but impossible not to notice … as long as you’re paying attention.

On a grand scale, society needs to quickly learn to treat video footage with the same skepticism it currently reserves for photographs. It’ll be a weird process, but it’s a necessary one.

Scientists are working on it (but this problem isn't going away)

Scientists see the storm looming on the horizon, so they’re working overtime to develop new technologies and programs that can expose deepfakes more easily. For example, Fast Company reports that researchers at the University of Southern California Information Sciences Institute (USC ISI) are creating AI-based software that can detect deepfakes with an accuracy rate of 96 percent. That’s pretty good.

However, while USC ISI researchers believe that at the moment they are way ahead of the deepfakers, they caution that the current technology is only going to get more advanced, and just like with hacking threats today, deepfake scams will probably become part of a continual “arms race” between those furthering the technology and those finding ways to shoot it down. The fact is, deepfakes are out there, and they’re not going to suddenly disappear. Pandora’s box won’t be closed.

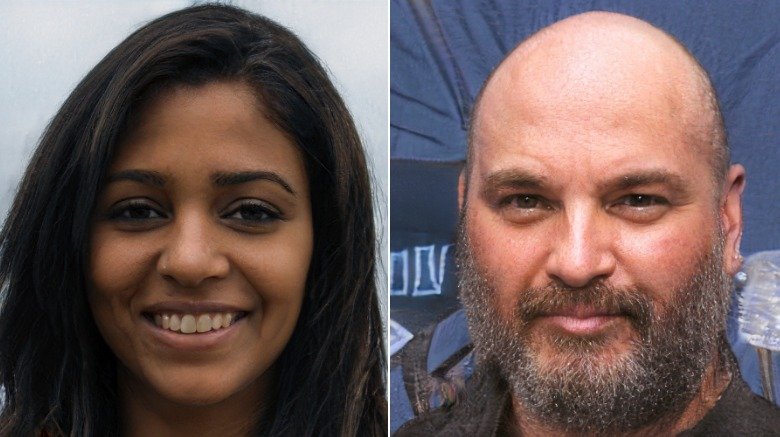

How the technology can create fake faces, fake cats … and fake Airbnbs?

By this point, you’ve probably grasped the exploitative possibilities inherent in deepfake technology. What you might not realize, though, is just how deep—and how strange—this rabbit hole can really go, according to Business Insider.

Recently, a number of bizarre new websites have cropped up which, rather than using GAN technology to swap two faces, use a GAN variant called “StyleGAN” to create entirely new, fictitious ones. Basically, if you go to one of these sites (for example, ThisPersonDoesNotExist.com), the AI will create a brand new face, generated from random input by a network that learned what faces look like by training on a huge collection of photos of real people. What’s fundamentally creepy about this is, as the name proclaims, that the people who appear on the page don’t exist. For example, see those two faces above? No matter how familiar they look, they’re not real.

The weirdness doesn’t end there. The creator of the aforementioned site also developed a version called ThisCatDoesNotExist, which does the same thing for felines. There’s also, bizarrely, a version called ThisAirbnbDoesNotExist, which automatically generates fake Airbnb listings, including a description of the room, photos of it, and even a picture of the host.

Criminal usage of deepfakes is on the rise

Another unforeseen problem? Deepfake crimes.

Here’s how they happen, according to Fast Company: First, a thief trains an AI program to replicate the sound of a CEO’s voice, using recordings from YouTube videos, phone calls, or what have you. Then, the thief uses the AI version of the CEO’s voice to call a senior financial officer and urgently request an immediate money transfer … right into the thief’s pocket. Silly as this process might sound, the cybersecurity firm Symantec has warned that three companies have already fallen victim to this very scam, losing millions of dollars.

The fact that such thefts have been pulled off against big corporations is a scary indicator of how easily they could also be performed on individuals, provided the AI could get enough audio samples of your voice. Meanwhile, the possibility of such AI fakery causing massive problems for the stock market is another scary issue that companies, and the world, need to start preparing for.

Unfortunately, it's becoming easier (not harder) for people to create deepfakes

Deepfakes are not going away. In fact, with every passing month, they only become easier to produce. For example, Vice points out that in 2019, researchers from two major universities in Israel revealed that they’d developed a program called FSGAN, which can create ultra-realistic face swap videos in real time on any two people, regardless of gender, skin tone, and so on, without the AI having to spend countless hours studying each person’s face. Way to make a scary technology even scarier, eh?

If you’re wondering what the heck these guys were thinking, the development of FSGAN is actually fueled by the desire to develop effective countermeasures against such forgeries: i.e., know thy enemy. The researchers are publishing the details of their work because they believe that suppressing it wouldn’t stop such programs from being developed, but would instead leave the general public, politicians, and tech companies in the lurch, without a proper understanding of what they’re dealing with.

As far as that last point goes, there’s certainly no debate that deepfakes are getting more advanced, and that understanding them—whether through exhaustive research, watching deepfake videos, or even just reading articles like this one—is the key to fighting back against misinformation.

However, is society ready for the dangers ahead?
